“Statistical prediction is only valid in sterile laboratory conditions, which suddenly isn't as useful as it seemed before” — Gary King, political scientist and quantitative methodologist

Imagine you arrive on the scene of a fire alarm in a four-story apartment building with two ride-alongs, Blaise and Pierre, both world-renowned statisticians and project managers of a program called “building watch.” Building watch has installed various sensors in structures across the city to gather data on emergency activity. Excitement shows on Blaise and Pierre’s faces once they recognize the address as one of their buildings. Immediately they open an app on their phones and begin taking notes.

The fire panel shows a fire alarm on the fourth floor. Your crew grabs its gear and starts for the stairs. Suddenly Pierre protests. Based on the data, Pierre claims you should leave the equipment and take the elevator instead of the stairs. “We have all of the data!” Pierre yells. “The average temperature in the building is only 75 degrees. We have been monitoring this building for years, and based on the statistical trends, we have 99 percent confidence that there is no fire. A fire in this building is a five-sigma event.”

Your crew ignores Pierre and climbs the stairs. Insistent, Pierre follows, continuing to barrage you with terms like correlation, central limit theorem, inference and regression analysis.

On the fourth floor, as you open the stairwell door to a smoke-filled hallway, Pierre yells one last protest: “It is a near impossibility a fire will happen, and I fear you lack an understanding of Laplace’s laws.”

Just before masking up, you yell back, “Yes, sir, but I fear you lack an understanding of Murphy’s law.”

The exact origin of Murphy’s law—“Whatever can happen, will happen” (Augustus De Morgan, 1866)—is uncertain, but its transcendence through our culture is an endorsement of its truth. Although not universally applicable (your car is not going to turn into a turkey), the philosophy of Murphy’s law has relevance to the emergency management domain. In our ideal world, we expect the best, but in the real world, we plan for the worst and prepare to be surprised.

The idealist vs. the realist

In an ideal world, events happen in an isolated, predictable pattern; all possible variables can be mapped out and weighed against the alternatives, producing precise forecasts. However, in the real world, events are not isolated, patterns are erratic, and countless variables interact, creating unimaginable events, like life itself. The opposing world views of idealism vs. realism have plagued the domain of probability for hundreds of years.

Origins of probability

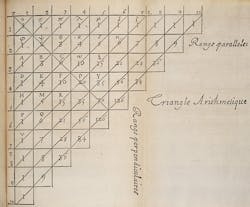

A priori, or knowing before the event: In 1653, a young French scholar named Blaise Pascal published “Treatise on the Arithmetical Triangle,” which describes what is now known as Pascal’s triangle. Pascal invented a methodology to predict the result of games by mapping out every possible outcome and comparing it to alternative outcomes.

For example, if I asked the odds of rolling a “3” on a six-sided die, Pascal’s theory is how we calculate that the odds are 1 in 6, since “3” is one of six equally likely outcomes. And if I asked the odds of rolling a “1” or “2” on an eight-sided die, the odds are 2 in 8. The procedure gets exponentially more complicated as the variables increase, but the process is still the same.
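Pascal's counting approach can be sketched in a few lines of Python. This is only an illustration of the idea, not a reconstruction of his method; the function names `a_priori_probability` and `probability_of_sum` are made up for this example, and the dice are assumed to be fair.

```python
from fractions import Fraction
from itertools import product


def a_priori_probability(sides, favorable):
    """Chance of rolling any face in `favorable` on a fair die with `sides` faces."""
    outcomes = range(1, sides + 1)                 # map out every possible outcome
    hits = [face for face in outcomes if face in favorable]
    return Fraction(len(hits), sides)              # favorable outcomes over all outcomes


def probability_of_sum(num_dice, sides, target):
    """Chance that `num_dice` fair dice add up to `target`, found by brute-force counting."""
    rolls = list(product(range(1, sides + 1), repeat=num_dice))  # sides ** num_dice outcomes
    hits = sum(1 for roll in rolls if sum(roll) == target)
    return Fraction(hits, len(rolls))


three_on_d6 = a_priori_probability(6, {3})
one_or_two_on_d8 = a_priori_probability(8, {1, 2})
seven_on_two_d6 = probability_of_sum(2, 6, 7)

print(three_on_d6)        # 1/6
print(one_or_two_on_d8)   # 1/4, the reduced form of 2 in 8
print(seven_on_two_d6)    # 1/6, from 6 of 36 outcomes
```

Each added die multiplies the number of outcomes to map by the number of sides, which is where the exponential growth comes from.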

Although brilliant, Pascal’s methods were designed for games played in a sterile and controlled environment. Pascal never intended his work to be applied to the real world. As we see later in his personal life, Pascal used an entirely different approach I will call “risk management.”

Bridging the gap—the ideal to the real

A posteriori, or based on past events: Roughly 100 years after Pascal, Pierre-Simon Laplace wanted to make predictions in the real world, specifically the movement of celestial bodies. Lacking the exact knowledge of each variable that Pascal enjoyed with his games of chance, Laplace substituted historical data for the known variables of the game. In other words, Pascal knew the number of sides on each die, whereas Laplace’s method guesses at the number of sides on the die by observing multiple rolls. But Laplace was not predicting the odds of rolling a “1” on a six-sided die; he was predicting the odds of the sun rising tomorrow.

Laplace’s work evolved into a complicated domain that requires a measure of confidence in the accuracy of the result based on the quality and size of data, whereas Pascal’s predictions were precisely accurate because he knew all of the variables before the game started.
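To make the contrast concrete, here is a rough sketch of estimating from observation instead of from known rules. It assumes we can only watch rolls of a die, and it uses the textbook normal-approximation margin of error as a stand-in for the "measure of confidence"; the function name `estimate_with_margin` and the sample sizes are illustrative choices, not anything Laplace wrote down.

```python
import math
import random


def estimate_with_margin(rolls, face, z=1.96):
    """Return (observed frequency of `face`, approximate 95 percent margin of error)."""
    n = len(rolls)
    p_hat = rolls.count(face) / n                      # estimate taken from history alone
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n)    # normal approximation
    return p_hat, margin


rng = random.Random(42)  # fixed seed so the sketch is repeatable
for n in (30, 300, 30000):
    rolls = [rng.randint(1, 6) for _ in range(n)]      # simulated observations of a fair die
    p_hat, margin = estimate_with_margin(rolls, face=1)
    print(f"{n:>6} rolls: estimate {p_hat:.3f} +/- {margin:.3f}")
```

The margin shrinks as the data grows, but it never says anything about a roll the observer has not yet seen, such as a die that gets swapped mid-game.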

Laplace is truly a giant amongst scholars, advancing mathematics, statistics, physics and astronomy. However, his methods can be misapplied and overleveraged. Standing on the backs of giants comes with the risk of a long fall.

Incidentally, Laplace calculated the odds of the sun rising tomorrow based on historical data as 99.999999… percent, which is about the most confident prediction one can make. However, based on the aging of the sun, there most certainly will be a day when the sun does not rise. Due to Murphy’s law, Laplace’s forecast is at best 99 percent accurate and one day will be 100 percent wrong.
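Laplace's sunrise estimate is usually presented through his rule of succession: after an event has occurred s times in n trials, the estimated chance of it occurring next time is (s + 1) / (n + 2). A minimal sketch follows; the day count is an illustrative assumption (about 5,000 years of unbroken sunrises), so the exact figure it prints depends entirely on that choice.

```python
from fractions import Fraction


def rule_of_succession(successes, trials):
    """Laplace's estimate of the chance of success on the next trial."""
    return Fraction(successes + 1, trials + 2)


days_observed = 5000 * 365   # assumption: roughly 5,000 years of recorded sunrises
p_sunrise = rule_of_succession(days_observed, days_observed)

print(f"Estimated chance of sunrise tomorrow: {float(p_sunrise):.8%}")
print(p_sunrise < 1)   # True: the estimate approaches certainty but never reaches it
```

Notice that the formula only knows how many sunrises were counted. Nothing in it knows that stars age.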

Forecasting using historical data

Imagine you are driving a car with the windshield blacked out, leaving the rear-view mirror your only means of navigation. The rear-view mirror gives clues to the environment, like the width of the road, depth of the shoulder, the slope of the terrain, and patterns in the road. With a lot of effort and a little intuition, you become attuned to the picture behind you, allowing you to choose a comfortable speed based on the trends you notice. A simple, straight road with a forgiving shoulder allows for higher speeds, while a complex, turning road causes you to slow down. But you never go faster than your ability to recover if the road conditions change.

Along your travels, you pick up a slightly burned French statistician, Pierre. Your new passenger is surprised at the amount of effort required to keep the car on the road and offers to help. He identifies a “descriptive data sample” that “highly correlates” to the direction of the road, or in layman’s terms, he says to simply watch the yellow line painted on the road.

Taking Pierre’s advice, you focus on the yellow line, which is much easier than surveying the entire environment. You relax and enjoy the ride, thanking your new friend.

In a hurry to get his burns checked at a local clinic, Pierre suggests you drive faster based on his 99 percent confidence that the current trends will continue with a small margin of error. The new speed, based solely on forecasts, is beyond your ability to recover if the road should turn dramatically. An instinctive fear creeps over you as the car accelerates and Pierre’s dazzling math surpasses your judgment.

From financial to fire service forecasting

The forecasting model Value at Risk (VaR) is largely blamed for the extent of the financial meltdown in 2008. In a nutshell, VaR summarizes a financial firm’s cumulative risk into one metric based on probability, creating an elegant, precise measurement of risk that is deeply flawed. In the words of hedge fund manager David Einhorn, VaR is “potentially catastrophic when its use creates a false sense of security among senior executives.”
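A stripped-down sketch shows how a single VaR number can look precise and still miss the event that matters. This is the simple "historical simulation" flavor of VaR, not the model any particular firm used, and every number in it is hypothetical: the return history is invented to be calm, and the crash is invented to be absent from that history.

```python
def historical_var(returns, confidence=0.99):
    """Loss level that past daily returns stayed within `confidence` of the time."""
    losses = sorted(-r for r in returns)                      # positive numbers are losses
    index = min(int(confidence * len(losses)), len(losses) - 1)
    return losses[index]


# Hypothetical history: 250 calm trading days with returns between -0.3% and +0.3%.
calm_history = [0.001 * ((day % 7) - 3) for day in range(250)]
var_99 = historical_var(calm_history)

crash = -0.20   # hypothetical one-day loss that never appeared in the history

print(f"99 percent one-day VaR from history: {var_99:.3%}")
print(f"Crash loss as a multiple of VaR: {abs(crash) / var_99:.0f}x")
```

The metric is only as wide as the rear-view mirror it was built from. A loss that never showed up in the data cannot show up in the forecast.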

VaR’s potential to mislead with forecasts came under such fire that risk manager and scholar Nassim Taleb testified before Congress demanding the banning of VaR from financial institutions. In fact, in an act of intuition, Taleb predicted the damage caused by overleveraged forecasting models publicly in 1997, claiming that VaR “ignored 2,500 years of experience in favor of untested models built by non-traders” who “gave false confidence” because “it is impossible to estimate the risks of rare events.”

Despite their shortcomings, VaR-type forecasting models are in use in the fire service. It started with the 1972 RAND Institute report “Deployment Research of the New York City Fire Project,” an impressive display of predictive analytics suggesting the closure of fire stations. “Convinced that their statistical training trumped the experience of veteran fire officers,” RAND spent years building computer models to predict fires, and implemented station closures based on the analytics. The result was numerous uncontrolled fires and more than 600,000 people displaced from burnt homes. FDNY let dazzling math surpass its judgment. Forecasting is like a chainsaw: It’s easy to use, hard to use well and always dangerous.

As cities such as Atlanta and Cincinnati dive into predictive analytics, New York is taking another swing at it with FireCast, “an algorithm that organizes data from five city agencies into approximately 60 risk factors, which are then used to create lists of buildings that are most vulnerable to fire.” Ranking building-based vulnerability seems reasonable, but once you start to forecast events in a complex system, challenges arise.

Think of the difficulty of forecasting the winner of Game 7 in the 2016 World Series. Relatively speaking, this is a simple problem—you only have 50 participants playing a defined game for a limited time. Your participants all have plenty of descriptive and predictive data from a relatively sterile environment. Yet the outcome of the game was largely unknown until the end.

Contrast the simple baseball problem to forecasting the complex behavior of a city with 1 million residents over a limitless time frame. We have taken a model stretched to its limits with a simple system and tried to expand it beyond imagination to fit a complex system.

Complex systems vs. simple systems

Probability works, forecasting works … in the right domain with simple systems. The Cynefin framework defines simple systems as the “known knowns.” Like the baseball game, simple systems are stable with identifiable, repeatable and predictable causes and effects, such as predicting mileage on a fire engine over the next five years. In simple systems, you study the history, forecast the future, and plan.
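The fire engine mileage case is the kind of problem where "study the history, forecast the future" holds up. As a rough sketch, a straight line fitted to past odometer readings does the job; the readings below are hypothetical, and `fit_line` is an ordinary least-squares helper written for this example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical end-of-year odometer readings for one engine.
years = [1, 2, 3, 4, 5]
odometer = [8000, 16500, 24000, 32500, 40000]

slope, intercept = fit_line(years, odometer)
forecast_year_10 = slope * 10 + intercept   # five years past the last reading

print(f"Average miles per year: {slope:.0f}")
print(f"Forecast odometer at year 10: {forecast_year_10:.0f}")
```

The forecast is trustworthy here only because the cause (a rig running a similar number of calls each year) is stable and repeatable. The same arithmetic applied to where the next fire will start carries no such guarantee.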

This is opposed to complex systems, in which cause and effect are only detectable in hindsight. These systems are inherently unpredictable due to the massive numbers of variables and influences. This is where Murphy’s law comes from. Simple systems are easy to dissect and understand, but complex systems “are impervious to a reductionist, take-it-apart-and-see-how-it-works approach because your very actions change the situation in unpredictable ways.”1 In complex systems, probability is limited, forecasting creates a false sense of confidence, and Murphy’s law reigns.

Cities are complex systems. Human behavior is a complex system. Fires starting in cities driven by human behavior are exponentially complex. Be very skeptical of anyone with a mathematical model claiming to forecast the future of a complex system who can’t confidently forecast the winner of the next Super Bowl.

Wisdom first

“All models are wrong; some are useful.” — George Box, statistician

Probability is a brilliant methodology originally designed for sterile games of chance, evolving into a dazzling mathematical domain. Probability works in the proper context, but can produce deadly results if it is overleveraged. In the words of author Charles Wheelan, “Probability doesn’t make mistakes; people using probability make mistakes.”

The finance industry and the fire service have been burned by overleveraged forecasting models. The math is amazing, the results are elegant, but the consequences are catastrophic if they are applied to the wrong domain. Don’t let the math surpass your wisdom.

Reference

1. Stewart, T.A. “How to think with your gut.” Business 2.0 3, no. 11 (2002).

About the Author

Eric Saylors

Eric Saylors is a captain with the Sacramento, CA, Fire Department (SFD). His 20 years of fire service training and experience span many areas of public safety, including policy creation, policy analysis, program implementation, project management, EMS, hazardous materials, special rescue, high-rise operations and planning for large-area disasters. Saylors has pioneered and managed many projects within the fire service, including the SFD’s staffing software, records management system, payroll interface, CAD interface to electronic health records, roll call policy, and internal change control policy. He has a bachelor’s degree in finance from Sacramento State University and a master’s degree from the Naval Postgraduate School through the Center for Homeland Defense and Security.